Data warehouses are becoming increasingly popular as many businesses, nonprofits, and municipal organizations realize the value of storing their current and historic data in a dynamic and useful way.

The data warehouse is the central place where all of the different technical systems across the organization meet to store their data, from retail processing to customer management software to marketing campaigns and more. Creating the right data warehouse for every business is a hard ask, which is why there are so many different ways to do it.

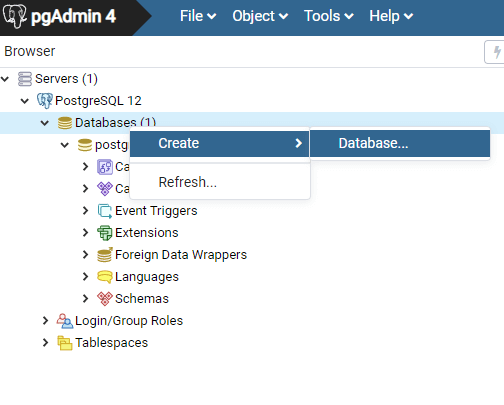

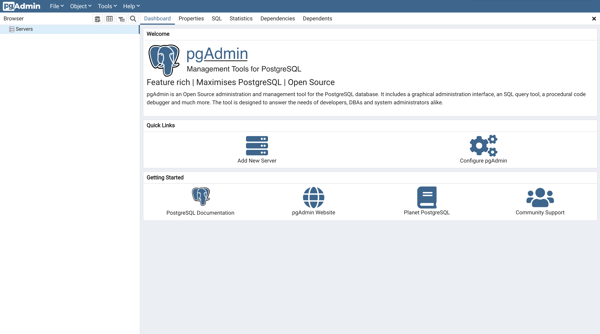

If you've heard of PostgreSQL, there's a reason. It's a useful and common data warehouse tool maintained by an active community. It can also handle more than just one kind of data processing, which makes it a pretty compelling option.

The 2 types of data processing

There are two types of critical data processing systems primarily used today: Online Transaction Processing (OLTP) and Online Analytical Processing (OLAP). They each serve different purposes and should be technically separate in any organization.

OLTP is almost exactly what it sounds like: a database responsible for operational transaction processing. It must be fast, dynamic, and responsive to database failure with a backup plan.

This kind of processing is used for e-commerce purchases, bank account transactions, retail purchases, and the like. Ideally very little data is saved in this database, so that the transaction response times can remain nimble. Historic data should get sent along to the next process, so OLTP shouldn't be part of a data warehouse.

OLAP is different: its purpose is to save historical data, and maintain the Extract, Transform, Load (ELT) processes that are used for data analysis. The OLAP system is at the heart of critical business decisions, offering up data related to day-to-day performance and long-term organizational stability.

This is a slower form of data processing, but part of that is based on how much historical data is stored in an OLAP system. This is the system you should find as the backbone of the data warehouse.

PostgreSQL as a data warehouse: The benefits

As I said before, an excellent feature of PostgreSQL is its ability to be used for both OLTP and OLAP. This makes it easier for the databases that are using OLAP to store the data to speak to the databases using OLTP to create the latest data. This may be the primary reason that PostgreSQL is so popular, but let's focus on why it's used heavily in OLAP as a data warehouse.

It's extremely compatible

It's extremely compatible

PostgreSQL works with almost any kind of programming language that's used in modern data extraction, from Python to .NET to Java and more.

Postgres is free

Yes, I said it. It’s free and easy to install and try right away, and if you're anything like me you love to do that. Never underestimate the power of immediate experimentation.

It's basically SQL

Someone out there is going to read that subheading and be upset with me, but here's the thing: I've never encountered a situation where I had to question whether or not the query I wrote using my SQL background would run in a PostgreSQL database. It just works, and most everybody in the data world knows a bit of SQL.

Postgres as a DW: Limitations and challenges

Although PostgreSQL is a popular and useful data warehouse solution for many reasons, there are also challenges that come with using it, especially at an enterprise level scale. Here are just a few.

Version bugs and dependencies

Whatever version of PostgreSQL a data team may choose will have to be compatible with all of the other programming and hardware dependencies they work with. Most of the time this isn't a problem, but depending on the data transformation framework you layer on top of your data warehouse, it could cause errors for engineers and compatibility confusion.

Security vulnerabilities

Open-source solutions are fantastic, and frankly they have changed the programming and engineering worlds when it comes to technological innovation and the opportunity for community development.

However, open source solutions are more vulnerable to software attacks because the source code is available to the public. According to research from RiskSense, every year these code injection attacks happen at an increasing rate.

Load-balancing at scale is tricky

No matter the level of the business, organizations large and small find themselves in need of fast, reliable transactional processing and data analytics processing. Today this usually means load-balancing between several different databases which can execute read-write processes in consistent ways.

With PostgreSQL, that quickly becomes a complicated and multifaceted issue for any organization using it. There are eight different ways of handling this in the official PostgreSQL documentation alone. It can be done, but it's tough, and even tougher the more data you need to process.

Support for urgent technical issues is lacking

What happens if you have a PostgreSQL production issue that needs immediate technical resolution, but your engineers aren't sure how to solve the problem? The solution is going to involve a lot of panicked Googling along with trial and error fixes. Perhaps you can ask the community for help and wait for an answer, but sometimes that never comes.

Other data warehouse options

So what else do enterprises use for a data warehouse? A host of other options are available, but it can often be hard to find the right one. Two of the most popular solutions are Google BigQuery and Snowflake.

BigQuery

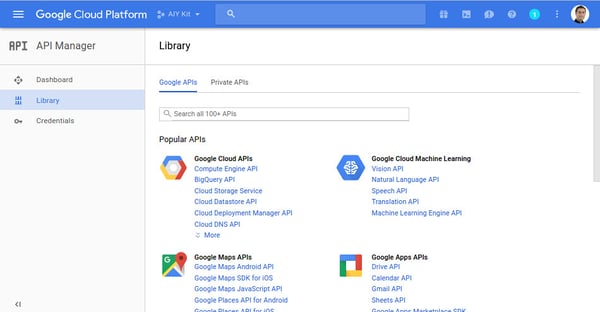

Google's BigQuery product is a highly configurable and flexible data processing tool suite that uses SQL as its backbone. What does BigQuery do that PostgreSQL can't?

First, the suite is a built-in cloud server platform that doesn't require local installation. Though it's a paid service for any storage, processing, or scheduling server jobs, BigQuery can do a lot of different things with the data you have, like automatic load-balancing on Google's serverless framework so less manual configuration is required.

However, just because it has a lot of functionality doesn't mean it's stable. BigQuery might be fine for an organization with varied data workloads and engineers with time to explore the BigQuery user interface, but Google Cloud Platform—and Google Cloud Console in particular—are pretty complicated. That, and BigQuery doesn't guarantee a connection to any external source yet, so there are a couple of limitations.

Snowflake

Snowflake is another data warehouse tool that has become increasingly popular since it emerged in 2014. It's well-known for having a dynamic architecture that can handle a lot of data processing at scale. Automatic load-balancing and semi-serverless processes are just a couple of the perks to Snowflake's cloud warehouse solutions.

Snowflake also connects to dozens of different certified data interfaces, which may explain why it's another paid service. It's scalable, fast, and secure.

Sounds amazing, right? It is, if you are a skilled and seasoned SQL warrior. Snowflake is great, but configuration and maintenance take the hands of data architects who have the expertise and the knowledge to complete the work.

If you're like me and you've learned SQL along with another half a dozen languages, you know that it takes some time to work in a primary language. If someone has the engineers on hand to configure Snowflake, it's well worth the complex technical effort. But it will be just that: complex technical effort.

A simpler alternative

You can see why so many organizations turn to a free community tool like PostgreSQL, which can be used as a data warehouse software to a point. But when working with Postgres as a data warehouse, there are security vulnerabilities, version compatibility problems, issues with scaling, and a lot of technical programming to do for things like load-balancing. That's a lot of overhead before your business analytics can be processed efficiently.

There are data warehouse tools out there that have the best of both worlds: lots of data integrations for that sweet OLAP we all need, and an easier interface that doesn't take a lot of configuration. This is where a solution like Panoply comes in.

Panoply is an all-in-one ETL and data warehouse tool that requires no code to set up and standard SQL for querying your data. Almost anyone can manage data in a UI that allows the user to choose which data integration they'd like to connect, from Salesforce to Amazon Web Services to SQL Server and more.

Part of these connections is also the added security in Panoply that complies with critical security protocols like HIPAA and SOC2. It also allows the user to directly view databases and query data without leaving the UI, as well as connect to a host of analytical and BI tools for reporting and visualization.

Wrap up

There are a lot of reasons why someone might choose to program their own data warehouse from scratch or pick a paid tool that also requires specialized configuration.

Rarely does a business calculate the human costs of overhead, but if they did, they'd see exhausted data engineers who are tired of panicking when something goes wrong in production working overtime. This costs everyone time, stress, and likely money.

So if you’re looking for an easier way to get all your data in one place, why not instead use a tool like Panoply that handles data chores on the backend so you can get the real data analytics work done?